book a demo

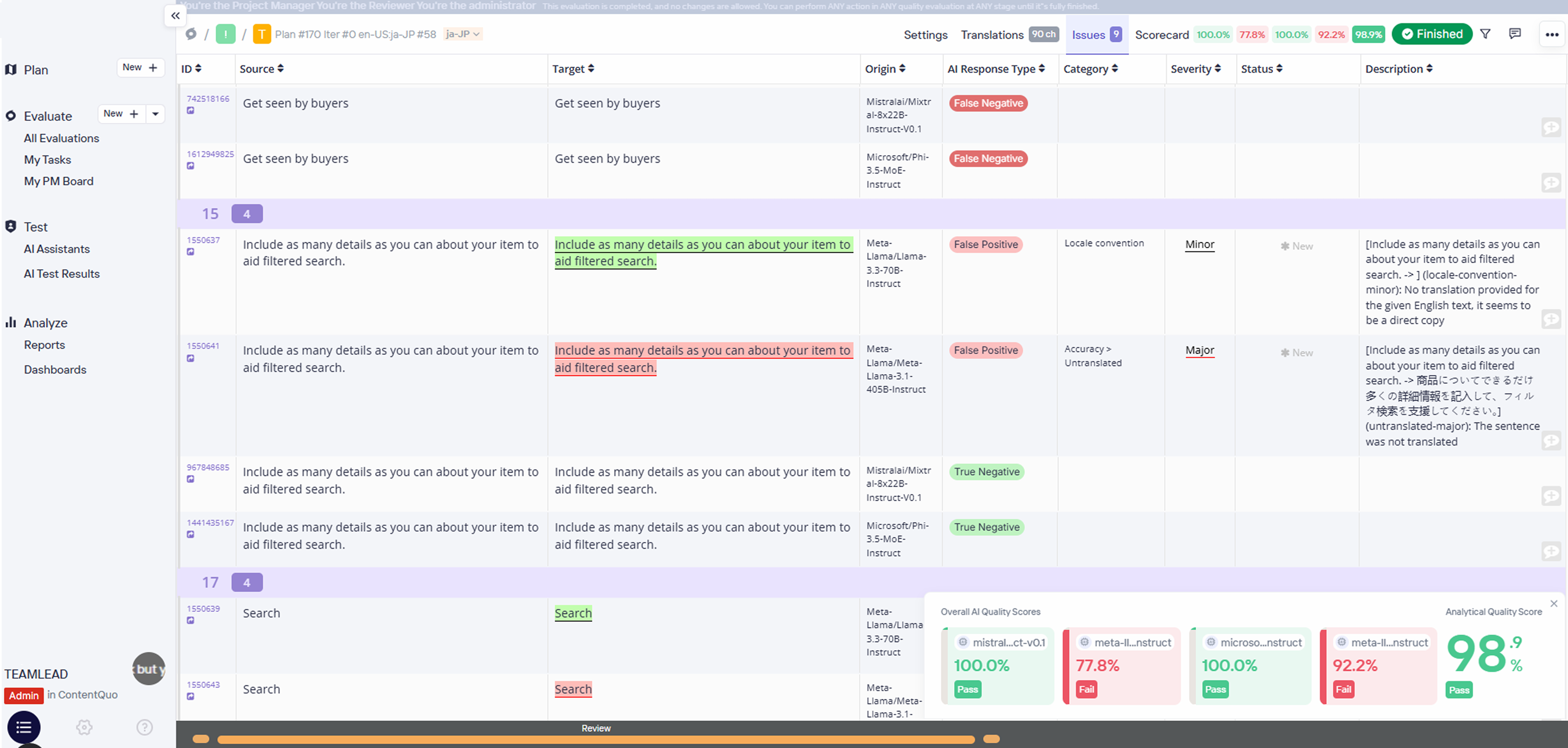

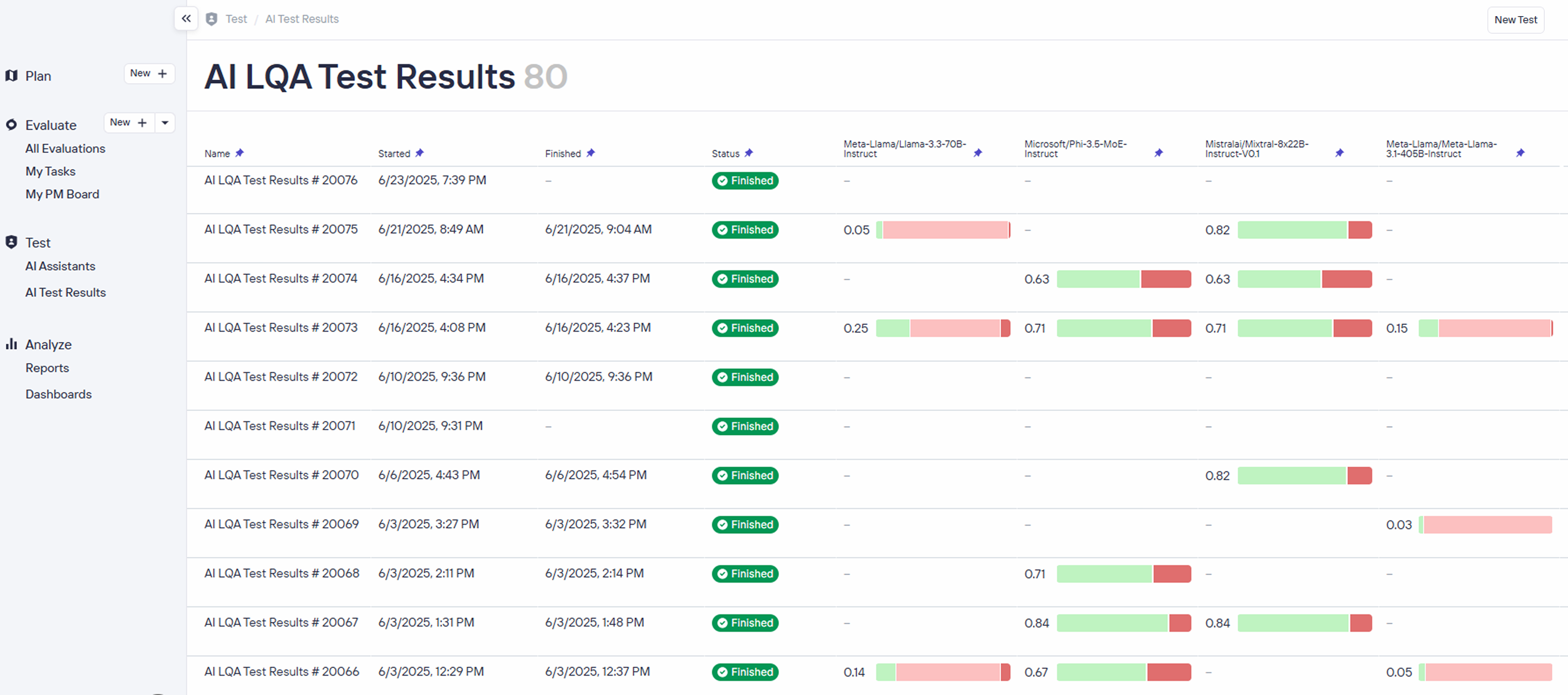

Compare multiple LLMs, prompts, and languages using your existing LQA setup.

Make AI LQA adoption decisions based on real metrics, not vendor claims.

Benchmark any AI LQA solution before you buy - at scale, automatically.

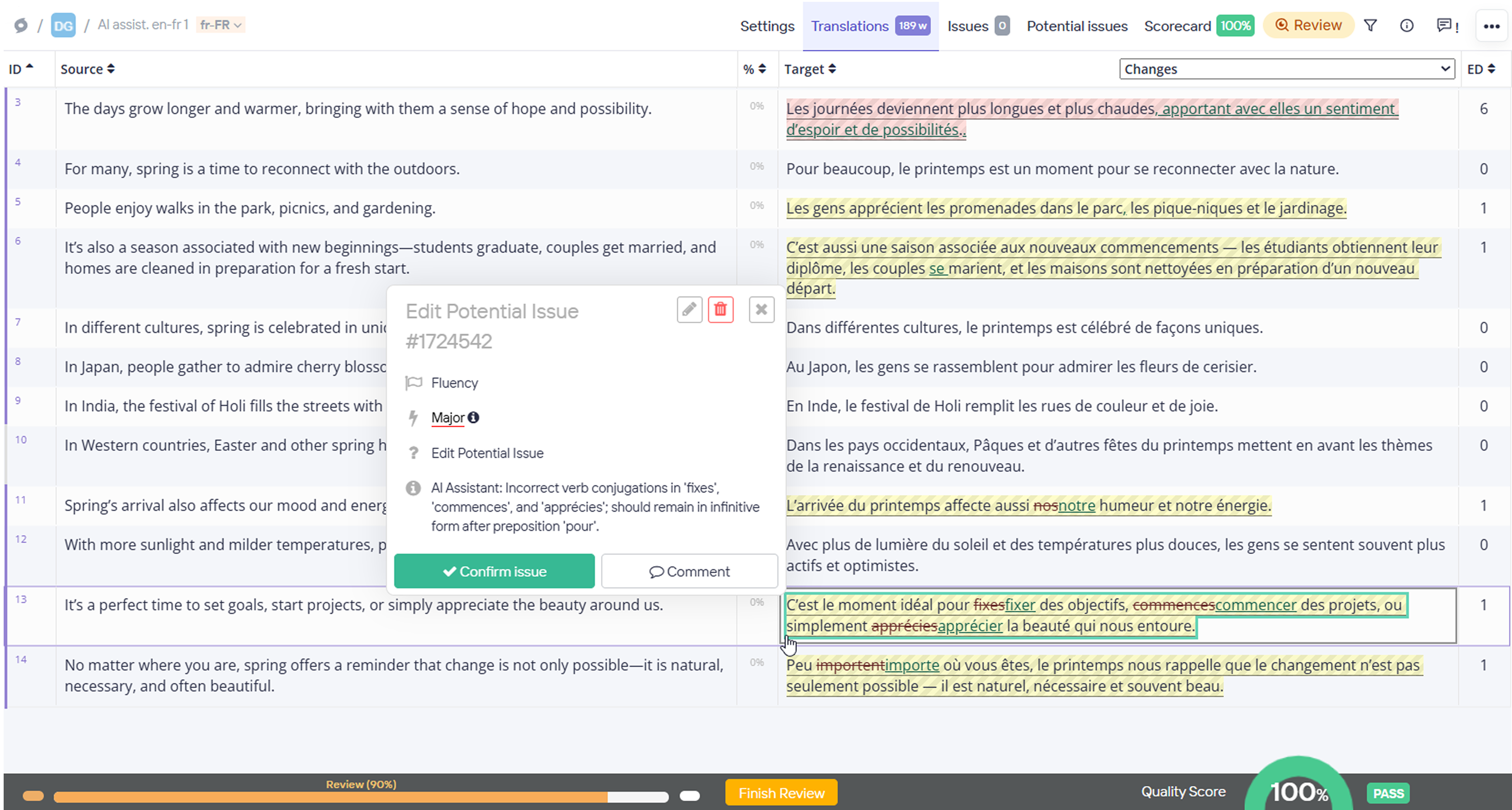

As LLM-assisted Translation Quality Evaluation (AI LQA) becomes part of the localization tech stack, it raises open questions: which AI vendor to use, how AI LQA compares to human reviewers, and how it performs across languages, content types, and domains.

ContentQuo Test gives localization teams a dedicated, specialized benchmarking environment to answer these questions with clarity & precision – before and after deploying AI LQA into production.

Plug in any AI LQA engine built by any vendor on any language model (OpenAI, Claude, Gemini, or any other). Compare engines side by side to find what works for your content, your languages, and your definitions of language quality.

TALK TO OUR AI LQA EXPERT
